Supervisors focus attention on AI

Banks must follow suit – BaFin defines minimum requirements for the use of AI

The purpose of BaFin’s publication is to inform financial institutions early on about its preliminary considerations on possible minimum requirements for the use of AI. The paper is explicitly preliminary and has not been adopted as law, but it provides extensive insight into BaFin’s thinking on AI and can therefore serve as a roadmap.

BaFin has divided its comments into three sections:

- Key principles

- Specific principles for the development phase

- Specific principles for the application phase

This structure shows that BaFin is well aware of the difference between developing an AI application and operating it in production. Both phases differ fundamentally from the way conventional applications are developed and supported.

Definition of artificial intelligence and how to distinguish it from conventional methods

BaFin begins the paper by attempting to define the term “artificial intelligence” and states that, at present, AI cannot yet be clearly distinguished from conventional statistical methods.

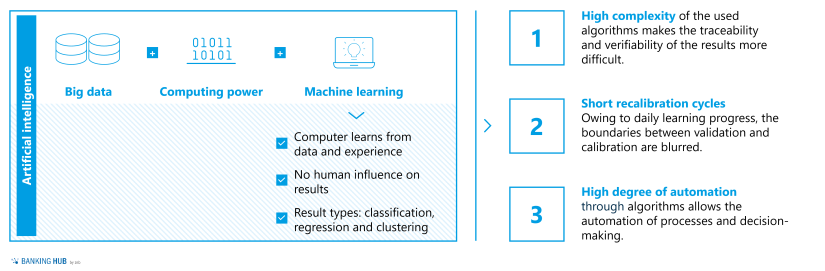

BaFin understands artificial intelligence to be the symbiosis of big data, high computing power and machine learning. Machine learning is an umbrella term for methods of data-driven learning, which can be differentiated by type of algorithm, type of result (classification, regression, clustering) and type of data (text, speech, image data).

Since machine learning relies on statistical models that financial institutions already use in the form of traditional regressions, the principles defined in this paper apply in principle to all decision-making processes supported by algorithms, including established statistical decision-making models. BaFin therefore sees a need to differentiate artificial intelligence from these conventional methods and defines three key features that characterize AI:

- High complexity of the underlying algorithms

- Short recalibration cycles

- High level of automation

However, these features still do not clearly distinguish AI from the statistical regression models already used in banks. The supervisor is therefore expected to refine the definition in the future.

BaFin’s AI principles

Key principles for the use of artificial intelligence

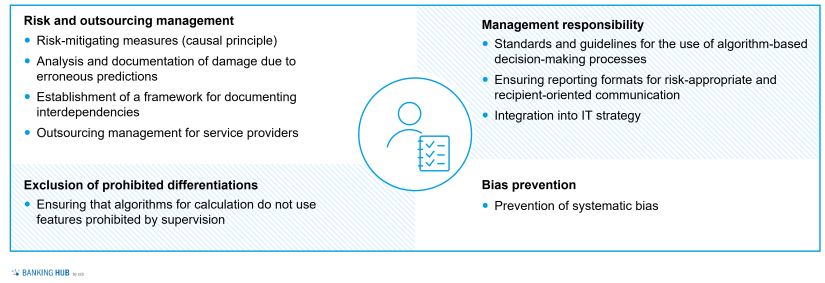

Senior management must define guidelines for the use of artificial intelligence. These guidelines should capture the potential as well as the limitations and risks of the technology, define risk mitigating measures and expand existing IT strategies accordingly.

To this end, institutions must conduct a comprehensive analysis of potential damage arising both directly from erroneous algorithm-based decisions and from interdependencies between different AI applications. To meet these requirements adequately, the management board must build up the necessary technical understanding of artificial intelligence.

In addition to defining responsibilities at management board level, BaFin’s key principles emphasize the importance of preventing systematic bias and ruling out any differentiation that is prohibited by law. Compliance with these principles depends largely on the underlying training data.

Systematic bias is a distortion in AI results caused by erroneous or incomplete data or by inadequate data processing. In such cases, the model adopts conscious or unconscious human biases reflected in the data and draws seemingly logical conclusions from them. As a result, groups of people, ethnicities or minorities may be systematically discriminated against and disadvantaged.

A well-known example of AI bias is a recruiting algorithm at an online retailer that favored male candidates when filling job vacancies. The algorithm had concluded from the training data that men worked more successfully in the company. However, this result was biased by the underlying training data. Such discrimination can be rooted in human prejudice or in the use of features that may no longer be used for differentiation under current law.

This example shows how important it is to select and prepare training data properly. At the same time, it highlights a limitation of artificial intelligence: the computer learns not only to recognize relationships but also our biases and preferences. To meet the considerable responsibility that comes with data selection, BaFin calls for an overarching review process intended to prevent discrimination anywhere in the institution. Financial institutions therefore have an even stronger reason to invest in high-quality data. This also gives them the chance to detect discrimination already embedded in existing processes and to make future decisions fairer.
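To make the idea of a bias review more concrete, the following Python sketch compares how often a model selects members of different groups. The group labels, the decision data and the 0.8 threshold (borrowed from the US "four-fifths rule" heuristic) are illustrative assumptions; BaFin's paper does not prescribe any particular fairness metric.

```python
from collections import defaultdict


def selection_rates(records):
    """Share of positive outcomes per group; records are (group, selected) pairs."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest one (1.0 means parity)."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so selection rates do not differ
    return min(rates.values()) / highest


# Hypothetical model decisions on historical applicants: (group, hired?)
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

ratio = disparate_impact_ratio(decisions)
# Example threshold only (four-fifths rule heuristic), not a supervisory requirement.
if ratio < 0.8:
    print(f"Possible systematic bias: ratio {ratio:.2f} < 0.8, review training data")
```

A low ratio does not prove discrimination on its own; it is a trigger for examining which features and training records drive the difference.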

Specific principles for the development phase

In addition to overarching guidelines, principles are defined both for the development of AI applications and for their subsequent use.

BaFin calls for training data to be documented and stored, and for it to be of high quality and sufficient quantity. Only if the data is stored can the records that led to biased AI decisions be identified afterwards and removed from the training data set in a validation process.

This ensures that AI results are traceable and reproducible, although particularly complex AI models such as neural networks can hardly meet the traceability requirement. BaFin thus sets limits on the complexity of the AI that financial institutions can use, and consequently also limits the opportunities it offers.
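One way to make stored training data traceable is to record a fingerprint of each snapshot together with basic metadata. The sketch below assumes training rows are available as Python dictionaries; the record fields (`model_id`, `source`, and so on) are hypothetical and not a format required by BaFin.

```python
import hashlib
import json
from datetime import datetime, timezone


def fingerprint_rows(rows):
    """SHA-256 over a canonical JSON serialization (sorted keys) of the training rows."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def document_training_data(rows, source, model_id):
    """Build a hypothetical audit record describing one training data snapshot."""
    return {
        "model_id": model_id,
        "source": source,
        "row_count": len(rows),
        "columns": sorted({key for row in rows for key in row}),
        "sha256": fingerprint_rows(rows),
        "recorded_at": datetime.now(timezone.utc).isoformat(),
    }


# Hypothetical training snapshot for a credit model
training_rows = [
    {"customer_id": 1, "income": 52000, "default": 0},
    {"customer_id": 2, "income": 31000, "default": 1},
]
record = document_training_data(training_rows, source="crm_export_q1", model_id="credit_score_v3")
print(json.dumps(record, indent=2))
```

Because the hash changes whenever a record is added, altered or removed, a later validation can confirm exactly which data a model was trained on, and removing biased records produces a new, separately documented snapshot.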

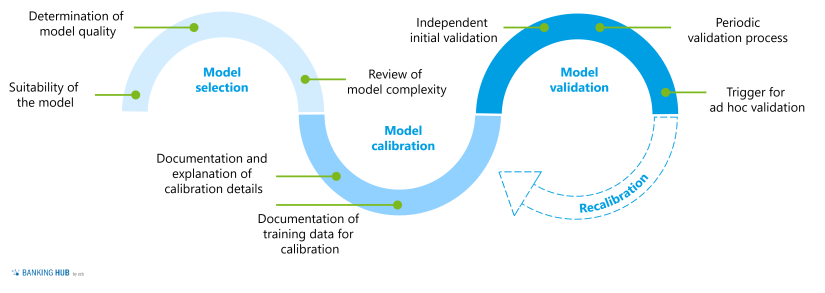

Introducing AI solutions requires not only selecting high-quality training data but also, for example, preparing that data, selecting suitable algorithms and defining parameters. A suitable AI can only be developed by trying out different models. BaFin therefore requires model selection, model calibration and model validation to be documented and explainable, which indirectly also requires institutions to review several models in order to identify a suitable one.
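Documenting model selection essentially means keeping a log of every candidate that was evaluated, not just the one that was chosen. The following minimal sketch assumes simple threshold rules on a risk score as stand-in candidates and accuracy as the validation metric; both are hypothetical simplifications, and in practice the candidate models and the metric would be chosen for the specific use case.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class CandidateResult:
    name: str
    params: dict
    validation_accuracy: float


def threshold_model(threshold):
    """Hypothetical candidate: predict a default if the risk score exceeds the threshold."""
    return lambda score: score > threshold


def accuracy(model, data):
    """Share of validation examples the model classifies correctly."""
    return sum(model(x) == y for x, y in data) / len(data)


def select_model(candidates, validation_data):
    """Evaluate every candidate, keep the full log and return the best one."""
    log = [
        CandidateResult(name, params, accuracy(build(**params), validation_data))
        for name, build, params in candidates
    ]
    best = max(log, key=lambda result: result.validation_accuracy)
    return best, log


# Hypothetical validation set: (risk score, defaulted?)
validation = [(0.1, False), (0.3, False), (0.45, True), (0.6, True), (0.8, True), (0.35, False)]
candidates = [
    ("threshold_0.2", threshold_model, {"threshold": 0.2}),
    ("threshold_0.4", threshold_model, {"threshold": 0.4}),
    ("threshold_0.7", threshold_model, {"threshold": 0.7}),
]
best, log = select_model(candidates, validation)
print(json.dumps({"selected": best.name, "candidates": [asdict(r) for r in log]}, indent=2))
```

Keeping the rejected candidates in the log is the point of the design: it documents why the selected model and its calibration were preferred.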

Specific principles for the application phase – human control is key

With the help of artificial intelligence, processes can be automated and decisions made by the computer. To ensure high-quality decisions and to interpret them, regular validation and constant human control are essential. BaFin recognizes their important role and defines several minimum requirements.

Human control is to be ensured through the following activities (minimum requirements):

- Since AI results may be used as input for other AI applications, possible risk aggregation needs to be considered and recorded. For each AI application, it must therefore be checked whether its input data was determined by AI and what errors may arise as a result. This could be the case, for example, in an AI-supported lending process in which the customer’s creditworthiness was itself determined using artificial intelligence. Any inaccuracy in the creditworthiness assessment can then lead to further inaccuracies and errors in the loan-granting process.

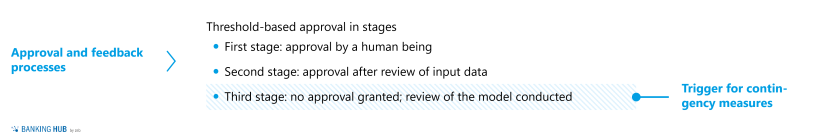

- Regardless of how business-critical the application is, its output must be checked for correctness and interpretability. Such interpretability analyses (e.g. revealing that predominantly white male candidates are being hired because of bias) can identify errors or inaccuracies in the training data.

- Plausibility checks that go beyond mere approval processes, e.g. a limit process, allow reviews of AI applications to be triggered early on. In a fraud detection application, for example, the rate of detected fraud attempts can serve as the limit metric. If this rate changes over time, an interpretability check is triggered. A decline in the rate could, for instance, be explained by a process adjustment that reduced fraud. However, if no explanation for the changed results can be found, the entire algorithm, the input data and the defined parameters should be reviewed.

- Contingency measures for business-critical processes in case the AI application fails.

- Periodic validation of the application.

- Ad hoc validation, for example in case of frequent decision deviations, or new external or internal risks.
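The limit process described for fraud detection can be sketched as a band around a baseline detection rate. The baseline, the tolerance and the monthly counts below are hypothetical, and the fixed band is a deliberate simplification; a production setup might use a statistical control chart instead.

```python
def detection_rate(flagged, total):
    """Share of processed transactions flagged as fraud (0.0 if nothing was processed)."""
    return flagged / total if total else 0.0


def limit_breaches(periods, baseline_rate, tolerance):
    """Return (period, rate) pairs whose rate leaves the band baseline_rate +/- tolerance."""
    breaches = []
    for period, (flagged, total) in periods.items():
        rate = detection_rate(flagged, total)
        if abs(rate - baseline_rate) > tolerance:
            breaches.append((period, rate))
    return breaches


# Hypothetical monthly counts: (transactions flagged as fraud, transactions processed)
monthly = {
    "Jan": (52, 10_000),
    "Feb": (49, 9_800),
    "Mar": (21, 10_200),
}
for period, rate in limit_breaches(monthly, baseline_rate=0.005, tolerance=0.002):
    print(f"{period}: rate {rate:.4f} outside limit, trigger interpretability check")
```

A breach does not mean the model is wrong; it only triggers the interpretability check, which may find a legitimate explanation such as a process change.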

Banks must prepare for regulatory requirements

Artificial intelligence will continue to gain importance in the future and offers immense opportunities for banking. However, the success of AI applications depends heavily on how they are developed and supported and on the control measures implemented for them.

The damage that can result from faulty algorithms and insufficient controls is difficult to quantify: it is not only financial but can also harm the reputation of financial institutions. Supervisors can therefore be expected to pay greater attention to the use of artificial intelligence in the future.

A systematic and timely evaluation of all regulatory initiatives at European and national level can be found in the Regulatory Hub provided by zeb. This includes the papers on AI published by BaFin and the EU.

What needs to be done?

zeb recommends that clients start immediately with an inventory of all data-driven processes to gain an overview of potential AI solutions. In this way, dependencies between algorithms as well as business-critical applications can be identified early on.

The result of such an inventory is an AI application map that shows the interconnections and dependencies between AI applications and other types of application. We recommend using predefined use cases, such as those that zeb has developed for different areas (risk management, sales, lending processes, etc.) and tested in practice.
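An AI application map can be represented as a dependency graph, which also supports the risk-aggregation check required in the application phase: for each application, it lists every AI application whose output flows into it, directly or indirectly. The application names and the `is_ai`/`inputs` structure below are illustrative assumptions, not a prescribed inventory format.

```python
from collections import deque

# Hypothetical application map: each entry lists the applications whose output it consumes.
applications = {
    "customer_master_data": {"is_ai": False, "inputs": []},
    "credit_scoring": {"is_ai": True, "inputs": ["customer_master_data"]},
    "income_estimation": {"is_ai": True, "inputs": ["customer_master_data"]},
    "loan_pricing": {"is_ai": False, "inputs": ["credit_scoring"]},
    "loan_approval": {"is_ai": True, "inputs": ["loan_pricing", "income_estimation"]},
}


def upstream_ai(app_map, app):
    """All AI applications whose output feeds `app`, directly or indirectly (breadth-first)."""
    found, seen = set(), {app}  # `seen` also guards against cyclic dependencies
    queue = deque(app_map[app]["inputs"])
    while queue:
        current = queue.popleft()
        if current in seen:
            continue
        seen.add(current)
        if app_map[current]["is_ai"]:
            found.add(current)
        queue.extend(app_map[current]["inputs"])
    return found


for name in applications:
    sources = upstream_ai(applications, name)
    if sources:
        print(f"{name}: depends on AI output from {sorted(sources)}")
```

The traversal continues through non-AI applications such as `loan_pricing`, so indirect chains like the AI-supported lending example are still detected.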

For each AI application, it is important to determine which of the supervisory principles are relevant to it and to what extent it currently complies with them. Since the principles leave room for interpretation in each use case, we recommend a conservative approach that interprets them narrowly, so that institutions are adequately prepared for future supervisory audits.
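The mapping of principles to applications can be kept as a simple gap register. In this sketch the principle names are a hypothetical shorthand for the requirements discussed above, and, in line with a conservative interpretation, a principle only counts as met if compliance has been explicitly recorded.

```python
from dataclasses import dataclass, field

# Hypothetical shorthand for the supervisory principles discussed in this article.
PRINCIPLES = (
    "training_data_documented",
    "model_selection_documented",
    "bias_review",
    "limit_process",
    "contingency_plan",
    "periodic_validation",
)


@dataclass
class Assessment:
    application: str
    business_critical: bool
    fulfilled: set = field(default_factory=set)  # principles with documented evidence

    def relevant_principles(self):
        """Contingency measures are only required for business-critical processes."""
        return [p for p in PRINCIPLES if p != "contingency_plan" or self.business_critical]

    def gaps(self):
        """Conservative reading: anything not explicitly evidenced counts as a gap."""
        return [p for p in self.relevant_principles() if p not in self.fulfilled]


register = [
    Assessment("fraud_detection", True,
               {"training_data_documented", "bias_review", "periodic_validation"}),
    Assessment("sales_propensity", False,
               {"training_data_documented", "model_selection_documented",
                "bias_review", "limit_process", "periodic_validation"}),
]
for entry in register:
    print(f"{entry.application}: open gaps {entry.gaps() or 'none'}")
```

Each open gap then becomes a candidate for the remediation measures described next.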

Where deviations from or non-compliance with regulations are identified, remediation measures must be defined. For new AI applications, a process should be set up from the outset that ensures compliance with regulatory requirements during development and before deployment.

In this context, future regulations from European (see EU AI Act[2]) and national supervisory authorities must also be taken into account by systematically monitoring their publications.