Background

In the wake of the financial crisis, a series of regulatory requirements has sought to strengthen the capacity of banks to cope with stress and crisis situations. The BCBS (Basel Committee on Banking Supervision) #239 principles are a significant regulatory requirement for effective risk data aggregation and risk reporting. All internationally systemically important institutions have to implement them by January 2016. Implementation is also recommended for domestic systemically important banks.

The BCBS #239 principles entail comprehensive adjustments to IT systems and processes and therefore require long implementation periods. The supervisory authority pays particular attention to high-quality data warehouses.

Challenges of BCBS #239 for data quality

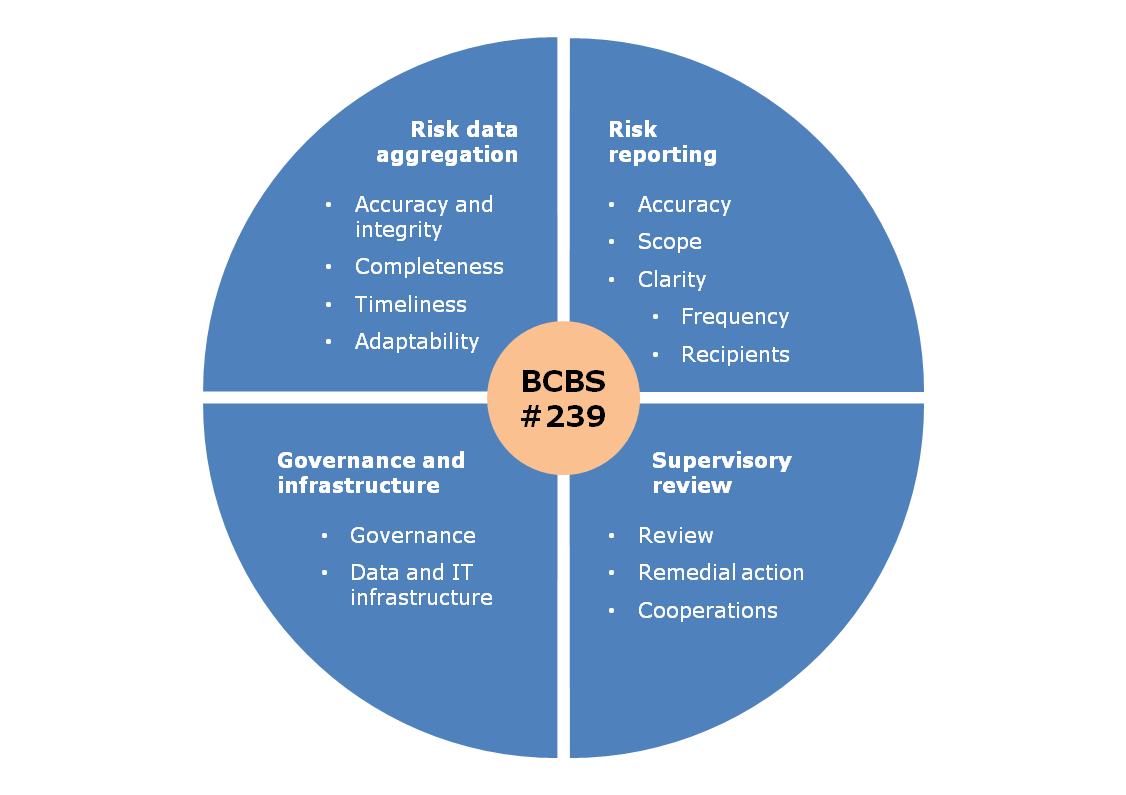

The BCBS rules significantly increase the current requirements for financial and risk reporting. The Bundesbank has already given an outlook on its planned regulatory interpretation of the BCBS principles in various panel discussions. BCBS #239 can be divided into four topic fields: governance and infrastructure, risk data aggregation, risk reporting, and supervisory review.

The governance and infrastructure topic field gives rise to a requirement for an IT infrastructure that provides a standardized data basis. It is therefore necessary to overcome the silo view of individual business units and to generate harmonized and comprehensive data. This demands data and methods that span all risk types.

The risk data aggregation and risk reporting topic fields are inextricably intertwined, since adequate risk reporting is only possible on a high-quality data basis. Risk data aggregation is characterized by four principles. First, the accuracy and integrity of data must be guaranteed. Manual entries therefore have to be monitored, and an automated comparison of risk data with other data, in particular financial reporting data, must be feasible. Second, the principle of timeliness must be fulfilled: the risk report must be presented within ten working days after the end of the month, based on checked data, and the bank must be able to provide critical risk data promptly in crisis situations. Third, the principle of adaptability requires that data can be generated ad hoc in a flexible and scalable manner. This makes a largely automated calculation and aggregation of all KPIs indispensable, because only then can ad hoc reports be set up according to any assessment criteria on the basis of checked data. The last principle of risk data aggregation is completeness, that is, the identification and collection of all relevant risk potentials.
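The automated comparison of risk data with financial reporting data mentioned above could be sketched roughly as follows. This is a minimal illustration, not a prescribed method: the function name `reconcile`, the portfolio keys, the sample amounts and the tolerance of 0.01 are all assumptions made for the example.

```python
from decimal import Decimal


def reconcile(risk_totals, finance_totals, tolerance=Decimal("0.01")):
    """Compare aggregated risk figures with finance figures per key.

    Returns a dict of breaks: key -> (risk value, finance value).
    A value of None means the key is missing on that side.
    """
    breaks = {}
    for key in sorted(set(risk_totals) | set(finance_totals)):
        risk = risk_totals.get(key)
        finance = finance_totals.get(key)
        if risk is None or finance is None:
            # Present in only one of the two data sets.
            breaks[key] = (risk, finance)
        elif abs(risk - finance) > tolerance:
            # Present in both, but the amounts differ beyond the tolerance.
            breaks[key] = (risk, finance)
    return breaks


# Hypothetical month-end totals per portfolio (illustrative values only).
risk_totals = {
    "retail": Decimal("1250000.00"),
    "corporate": Decimal("980000.50"),
    "trading": Decimal("310000.00"),
}
finance_totals = {
    "retail": Decimal("1250000.00"),
    "corporate": Decimal("979500.50"),
    "treasury": Decimal("42000.00"),
}

for portfolio, (risk, finance) in reconcile(risk_totals, finance_totals).items():
    print(portfolio, "risk:", risk, "finance:", finance)
```

Using `Decimal` rather than floating-point numbers avoids rounding noise in monetary comparisons. In practice, the tolerance and the reconciliation keys would have to be agreed between Finance and Risk.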

Risk reporting is characterized by five principles. The accuracy principle can only be realized through an end-to-end view from the feeder system to the report. Data flow charts are meant to make the data stream across systems and manual interfaces comprehensible and transparent; this is the only way to measure data quality across the entire process. Like risk data aggregation, risk reporting requires coverage of all significant risks. The principles of comprehensibility and suitability for the recipient demand that the risk report is easy to read and tailored to the recipients' needs in order to support sound decision making. Finally, there are requirements regarding the frequency of risk reports: the frequency must be adapted to the risks, and reports must be produced as quickly as possible.
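One way to make such a data flow chart machine-readable is to store it as a simple lineage graph and trace a report back to its feeder systems. The sketch below assumes a purely hypothetical landscape: the system names, the `LINEAGE` mapping, the `MANUAL_INTERFACES` set and the `upstream` helper are all illustrative.

```python
# Hypothetical lineage: each node maps to the nodes it reads from.
LINEAGE = {
    "risk_report": ["risk_datamart"],
    "risk_datamart": ["data_warehouse", "manual_adjustments"],
    "data_warehouse": ["loan_system", "trading_system"],
    "manual_adjustments": [],
    "loan_system": [],
    "trading_system": [],
}

# Nodes that represent manual interfaces (assumption for this example).
MANUAL_INTERFACES = {"manual_adjustments"}


def upstream(node, lineage):
    """Return every node that feeds `node`, directly or indirectly."""
    seen = set()
    stack = list(lineage.get(node, []))
    while stack:
        current = stack.pop()
        if current not in seen:
            seen.add(current)
            stack.extend(lineage.get(current, []))
    return seen


sources = upstream("risk_report", LINEAGE)
print("Feeds into risk_report:", sorted(sources))
print("Manual interfaces involved:", sorted(sources & MANUAL_INTERFACES))
```

With the lineage held as data, the manual interfaces behind any report can be listed automatically, which shows where data quality measurements are most needed.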

The requirements for the supervisory review are not discussed further here, since they pose no additional requirements for data quality.

Solution approach

In many institutions, the regulatory requirements described above cannot be implemented with the current data basis. Measuring and continuously improving data quality are therefore of paramount importance. Transparency, integrity and consistency of data are significant challenges for data quality.

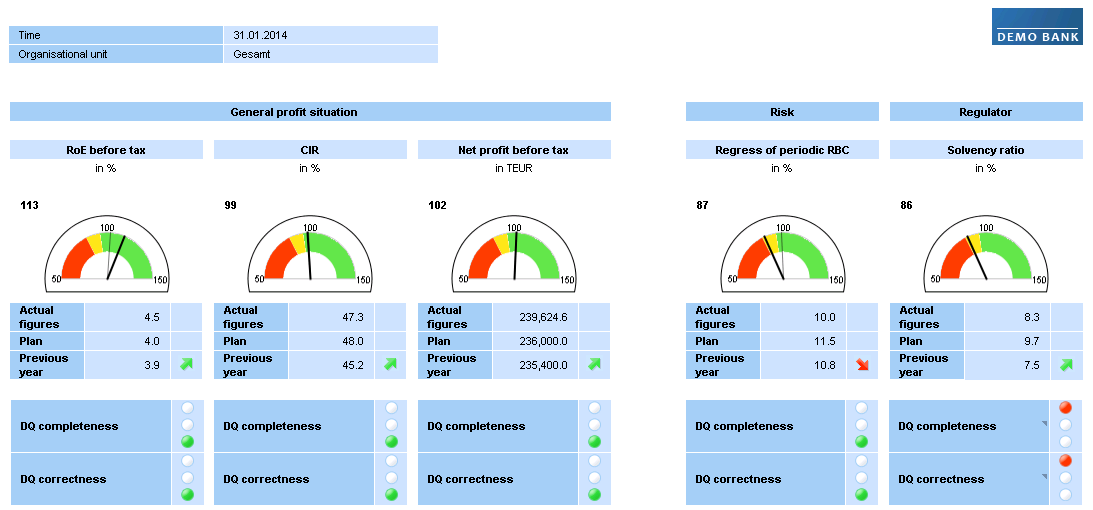

Data quality has a major impact on reporting results. Reports should therefore contain KPIs for data quality in addition to technical KPIs. This is the only way to give decision makers transparency on a topic that has been neglected so far. At the same time, it allows the significance and validity of the technical KPIs to be assessed. The KPIs for data quality enable the first steps of the analysis and should shed light on data completeness and correctness. Good data quality is characterized by the complete collection of all relevant data and by correct, that is, error-free, data.
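As a rough illustration of such data quality KPIs, completeness and correctness can be expressed as simple ratios over the delivered records. The definitions below are one possible choice and an assumption of this sketch: a record counts as complete when all required fields are filled, and as correct when it is complete and passes every validation rule. The field names, rating scale and rules are hypothetical.

```python
def data_quality_kpis(records, required_fields, rules):
    """Return completeness and correctness as shares of all records (0.0 to 1.0)."""
    total = len(records)
    if total == 0:
        # No data delivered at all: report both KPIs as 0 instead of dividing by zero.
        return {"completeness": 0.0, "correctness": 0.0}

    def is_complete(record):
        return all(record.get(field) not in (None, "") for field in required_fields)

    complete = [r for r in records if is_complete(r)]
    # Rules are only evaluated on complete records, so they never see missing values.
    correct = [r for r in complete if all(rule(r) for rule in rules)]
    return {
        "completeness": len(complete) / total,
        "correctness": len(correct) / total,
    }


# Hypothetical positions and validation rules (illustrative only).
positions = [
    {"id": 1, "exposure": 100.0, "rating": "A"},
    {"id": 2, "exposure": None, "rating": "B"},   # incomplete: exposure missing
    {"id": 3, "exposure": -5.0, "rating": "A"},   # incorrect: negative exposure
    {"id": 4, "exposure": 50.0, "rating": "ZZ"},  # incorrect: unknown rating
]
rules = [
    lambda r: r["exposure"] >= 0,
    lambda r: r["rating"] in {"A", "B", "C"},
]

print(data_quality_kpis(positions, ["exposure", "rating"], rules))
# prints {'completeness': 0.75, 'correctness': 0.25}
```

Shown next to the technical KPIs in a report, these two ratios give the recipient a first indication of how far the reported figures can be relied upon.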

If poor data quality has a measurable impact on relevant KPIs, a root cause and impact analysis based on the delivery systems of the affected data is required. The aim of the analysis is to identify the specific cause and course of the data problems and to estimate their impact on relevant economic KPIs, so that appropriate corrective measures can be proposed and prioritized. A rule-based examination of data quality combined with a root cause and impact analysis ensures the required traceability of data streams, including calculation steps, at the aggregated level. It must be possible to examine and correct data quality at different process stages, regardless of the technology used.
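A rule-based examination with a simple root cause and impact view might look like the following sketch. It assumes that every record carries the name of its delivery system and an exposure amount. The rule names, the `source_system` field and the use of absolute exposure as the impact measure are illustrative assumptions, not a prescribed method.

```python
from collections import defaultdict

# Hypothetical data quality rules: name -> predicate that returns True if the record is fine.
RULES = {
    "exposure_non_negative": lambda r: r["exposure"] is not None and r["exposure"] >= 0,
    "rating_present": lambda r: bool(r.get("rating")),
}


def analyse(records, rules):
    """Group rule violations by (delivery system, rule) and estimate their impact.

    Impact is approximated by the absolute exposure of the affected records.
    The result is sorted by impact, largest first, to support prioritization.
    """
    findings = defaultdict(lambda: {"count": 0, "affected_exposure": 0.0})
    for record in records:
        for name, rule in rules.items():
            if not rule(record):
                entry = findings[(record["source_system"], name)]
                entry["count"] += 1
                entry["affected_exposure"] += abs(record["exposure"] or 0.0)
    return sorted(
        findings.items(),
        key=lambda item: item[1]["affected_exposure"],
        reverse=True,
    )


# Hypothetical deliveries from two source systems (illustrative values only).
deliveries = [
    {"source_system": "loans", "exposure": -200.0, "rating": "A"},
    {"source_system": "loans", "exposure": 50.0, "rating": ""},
    {"source_system": "trading", "exposure": 1000.0, "rating": None},
    {"source_system": "trading", "exposure": 10.0, "rating": "B"},
]

for (system, rule_name), stats in analyse(deliveries, RULES):
    print(system, rule_name, stats["count"], stats["affected_exposure"])
```

Because the rules are plain functions over records, the same checks could in principle be run at different process stages, independently of the technology that holds the data.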

If data completeness is insufficient, a detailed examination should be carried out in order to identify the relevant source systems, data transfer channels and manual interfaces.

Close cooperation between the Finance and Risk units is necessary for setting up a shared data source. This guarantees the compatibility of different evaluations and the complete identification of all significant risk potentials. Apart from a cross-departmental solution between Finance and Risk, close cooperation between the business divisions and IT is also important. In addition to an understanding of the system and data landscape, in-depth technical knowledge is necessary to implement the developed target picture successfully.

Opportunity

If the bank succeeds in fulfilling the strict requirements for current, consistent and available data, it will reap economic benefits. It will be able to manage risks more efficiently and sustainably thanks to improved aggregation of risk data and higher-quality risk reporting. Integrating KPIs for data quality into management reports gives management a more reliable information basis for analyzing and preparing strategic decisions. This helps prevent serious mistakes that result from missing or wrong data.